Blog Archives

Oracle Bested the Best in Quality

I have been an avid reader of SearchStorage’s Storage magazine for many years now and have been downloading the free PDF copy every month. Quietly tucked away at the end of the January 2012 issue, there it was: the Storage magazine 6th annual Quality Awards for NAS.

I was pleasantly surprised by the results because the previous annual awards were dominated by NetApp and EMC, but this time around a dark horse has emerged. It is Oracle, which took top honours in both the Enterprise and the Mid-range categories.

The awards are the result of Storage Magazine’s survey and below is an excerpt about the survey:

In both categories covering the Enterprise and the Mid-Range, the overall ratings are shown below:

Surprised? You bet because I was.

The survey does not focus on speeds and feeds, nor does it compare scalability or performance. Rather, it focuses on the qualitative aspects of the NAS products. Many storage vendors were on the participation list, but most did not make a dent the way the top 6 did. Here’s a list of the vendors surveyed:

The qualitative aspects of the survey focused on 5 main areas:

- Sales force competency

- Initial Quality

- Product Features

- Product Reliability

- Technical Support

In each of the 5 main areas, customers were asked a series of questions. Here is a breakdown of the questions for each area.

Sales Force Competency

- Is the sales force knowledgeable about its products and its customers’ industries?

- How flexible is their sales effort?

- How good are they at keeping the customer’s interest levels up?

Initial Product Quality

- Does the product need little or no vendor intervention?

- Ease of installation and ease of use

- Good value for money

- Reasonable requirement from Professional Service or needing little Professional Service

- Installation without defects

- Getting it right the first time

Product Features

- Storage management features

- Mirroring features

- Capacity scaling features

- Interoperability with other vendors’ products

- Remote replication features

- Snapshotting features

Product Reliability

- Vendor provides comprehensive upgrading procedures

- Ability to meet Service Level Agreement (SLA)

- Experiences very little downtime

- Patches applied non-disruptively

Technical Support

- Taking ownership of the customer’s problem

- Timely problem resolution and technical advice

- Documentation

- Vendor supplies support contractually as specified

- Vendor’s 3rd party partners are knowledgeable

- Vendor provides adequate training

These are some of the intangibles that customers look for when they qualify NAS solutions from vendors. And the surprise was that Oracle has just become a force to be reckoned with, backed by the strong legacy of customer-centric focus from Sun and StorageTek. If this is truly happening in the US, then kudos to Oracle for making the most of the Sun-StorageTek enterprise genes to make their NAS products best-of-breed.

However, on the local front, it seems to me that Oracle isn’t doing much justice to the human potential they have inherited from Sun. A little bird has told me that they got rid of some good customer service people in Malaysia and Singapore just last month and more could be on the way in 2012. All this for the sake of meeting some silly key performance indicators (KPIs) measured in tasks per day.

The Sun people that I know here in Malaysia and Singapore are gurus who have gone through the fire and thrived, and there is no substitute for quality. Unfortunately, in Oracle, it’s all about numbers, whether it is sales or tasks per day.

Well, back to the survey. And of course, the final question would be, “Is the product good enough that you would buy it again?” And the results are …

Good for Oracle in the US but the results do not fully reflect what’s on the ground here in Malaysia, which is more likely dominated by NetApp, HP, EMC and IBM.

Primary Dedupe where are you?

I am a bit surprised that primary storage deduplication has not taken off in a big way, unlike the times when the buzz of deduplication first came into being about 4 years ago.

When the first deduplication solutions came out, they were aimed particularly at the backup data space. Now more popularly known as secondary data deduplication, the technology has reduced the inefficiencies of backup and helped spark the frenzy of adulation for companies like Data Domain, Exagrid, Sepaton and Quantum a few years ago. The software vendors were not left out either. Symantec, Commvault, and everyone else in town had data deduplication for backup and archiving.

It was no surprise that EMC battled NetApp and finally won the rights to acquire Data Domain for USD$2.4 billion in 2009. Today, in my opinion, the landscape of secondary data deduplication has pretty much settled and matured. Practically everyone has some sort of secondary data deduplication technology or solution in place.

But talk of primary data deduplication hardly causes a ripple now compared with a few years ago, especially here in Malaysia. Yeah, the IT crowd is pretty fickle that way because most tend to follow the trend of the moment. Last year it was Cloud Computing and now the big buzzword is Big Data.

We are here to look at technologies to solve problems, folks, and primary data deduplication technology solutions should be considered in any IT planning. And it is our job as storage networking professionals to continue to advise customers about what is relevant to their business and what addresses their pain points.

I get a bit cheesed off that companies like EMC or HDS continue to spend their marketing dollars on hyping the trends of the moment rather than using some of their funds to promote good technologies, such as primary data deduplication, that solve real-life problems. The same goes for most IT magazines, publications and other communications media, which rarely give space to technologies that solve problems on the ground and just harp on hype, fuzz and buzz. It gets a bit too ordinary (and mundane) when they are trying too hard to be extraordinary because everyone is basically talking about the same freaking thing at the same time, over and over again. (Hmmm … I think I am speaking off topic now .. I better shut up!)

We are facing an avalanche of data. The other day, the CEO of Nexenta used the term “data tsunami”, but whatever term is used does not matter. There is too much data. Secondary data deduplication solved one part of the problem and now it’s time to talk about the other part, which is data in primary storage, hence primary data deduplication.

What is out there? Who’s doing what in terms of primary data deduplication?

NetApp has had A-SIS (now NetApp Dedupe) for years and they are good in my books. They talk to customers about the benefits of deduplication on their FAS filers. (Side note: I am seeing more benefits from using data compression in primary storage but I am not going to go there in this entry.) EMC has had primary data deduplication in Celerra for years but they hardly talk about it. It’s on their VNX as well but again, nobody in EMC ever speaks about their primary deduplication feature.

I have always loved Ocarina Networks’ ECO technology, but Dell doesn’t seem to give a hoot about Ocarina since the acquisition in 2010. The technology surfaced a few months ago in the Dell DX6000G Storage Compression Node for its Object Storage Platform, but then again, all Dell talks about is their Fluid Data Architecture from the Compellent division. Hey Dell, you guys are so one-dimensional! Ocarina is a wonderful gem in their jewel case, and yet all their storage guys talk about are Compellent and EqualLogic.

Moving on … I ought to knock Oracle on the head too. ZFS has great data deduplication technology that is meant for primary data and a couple of years back, Greenbytes took that and made a solution out of it. I don’t follow what Greenbytes is doing nowadays but I do hope that the big wave of primary data deduplication will rise for companies such as Greenbytes to take off in a big way. No thanks to Oracle for ignoring another gem in ZFS and wasting their resources on pre-sales (in Malaysia) and partners (in Malaysia) that hardly know much about the immense power of ZFS.

But an unexpected source, Microsoft, could help trigger greater interest in primary data deduplication. I have just read that the next version of the Windows Server OS will have primary data deduplication integrated into NTFS. The feature will be available in Windows Server 8 and the architectural view is shown below:

The primary data deduplication in NTFS will be a feature add-on for Windows Server users. It is implemented as a filter driver on a per-volume basis, with each volume a complete, self-describing unit. It is cluster-aware and fully crash-consistent on all operations.

The technology is Microsoft’s own, built from scratch, and it will help position Hyper-V as a strong enterprise choice in its battle with VMware for the server virtualization space. Mind you, VMware already has a big, big lead and this is a do-or-die move that Microsoft must make just to keep Hyper-V in the game of catch-up. Otherwise, the gap between Microsoft and VMware in the server virtualization space will be even greater.

I don’t have the full details of this but I read that the NTFS primary deduplication chunk sizes will be between 32KB and 128KB and that it will be post-processing.
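To make the idea of post-process, chunk-based deduplication a little more concrete, here is a minimal Python sketch. It is only an illustration and not how the NTFS filter driver works: the fixed 64KB chunk size (picked from within the 32KB to 128KB range), the SHA-256 fingerprints and the function names `dedupe` and `rehydrate` are all assumptions made for this example.

```python
import hashlib

# Assumed fixed chunk size, chosen from within the 32KB-128KB range above.
CHUNK_SIZE = 64 * 1024


def dedupe(data: bytes, chunk_size: int = CHUNK_SIZE):
    """Split data into chunks and keep only one copy of each unique chunk.

    Returns (store, recipe): store maps fingerprint -> chunk bytes, and
    recipe is the ordered list of fingerprints needed to rebuild the data.
    """
    store = {}
    recipe = []
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        fingerprint = hashlib.sha256(chunk).hexdigest()
        store.setdefault(fingerprint, chunk)  # store a chunk only once
        recipe.append(fingerprint)
    return store, recipe


def rehydrate(store, recipe) -> bytes:
    """Rebuild the original data from the chunk store and the recipe."""
    return b"".join(store[fingerprint] for fingerprint in recipe)


if __name__ == "__main__":
    # Five logical chunks, but only two distinct ones.
    data = b"A" * CHUNK_SIZE * 3 + b"B" * CHUNK_SIZE * 2
    store, recipe = dedupe(data)
    stored = sum(len(chunk) for chunk in store.values())
    print(f"logical bytes: {len(data)}, stored bytes: {stored}")
    print(f"chunks referenced: {len(recipe)}, unique chunks: {len(store)}")
```

"Post-processing" here simply means the data is written in full first and a pass like `dedupe` runs over it afterwards, rather than inline on the write path.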

With Microsoft introducing their technology soon, I hope primary data deduplication will get some deserved accolades because I think most companies are really not doing justice to the great technologies that they have in their jewel cases. And I hope Microsoft, with all its marketing savvy, will do some justice to a technology that solves real-life data problems.

I bid you good luck – Primary Data Deduplication! You deserve better.

“Ugly Yellow Box” bought by private equity firm

Security is BIG business, probably even bigger than storage and with more “sex” appeal and pizzazz! My friends are owners of 2 of the biggest security distributors in town, so I know. I am not much of a security guy, but the reason I write about Bluecoat is that this company has something close to my heart.

In the early 2000s, NetApp used to have a separate division that was not storage. They had a product called NetCache, which was a web proxy solution. It was a pretty decent product and one of the competitors we frequently encountered in the field was an “ugly yellow box” called CacheFlow. Whenever we saw an “ugly yellow box” in a rack, we would immediately know that it was a CacheFlow box. NetApp competed strongly with CacheFlow, partly because their CEO and founder, Brian NeSmith, as we NetAppians were told, was ex-NetApp. And there was some animosity between Brian and NetApp, up to the point that I recall NetApp’s CEO then, Dan Warmenhoven, declaring that “NetApp will bury CacheFlow!”, or something of that nature. At that point, circa 2001-2002, CacheFlow was indeed in a bit of a rut as well. They suffered heavy losses and were near bankruptcy. An old news article from Forbes confirmed Brian NeSmith’s near-bankruptcy adventure.

CacheFlow survived the rut, changed their name to Bluecoat Systems, and changed their focus from Internet caching to security. Know why they are known as “Bluecoat”? They are the policemen of the Internet, and policemen are men in blue coats. I found an old article from Network World about their change. And they decided not to paint their boxes yellow anymore. 😉

Eventually, it was CacheFlow who triumphed over NetApp. And the irony was that NetApp eventually sold the NetCache unit and its technology to Bluecoat in 2006. And that is my account of the history of Bluecoat.

Yesterday, Bluecoat was in the history books again, but for a better reason. A private equity firm, Thoma Bravo, has put in USD$1.3 billion to acquire Bluecoat. News here and here.

Have a happy Sunday 😀

Gartner 3Q2011 WW ECB Disk Storage Market

Just after IDC released their numbers of their worldwide Disk Storage System Tracker (Read my blog) 10 days ago, Gartner released their Worldwide External Controller Based (ECB) Disk Storage Market report for Q3 of 2011.

The storage market remains resilient (for now) and grew 10.4% in terms of revenue, despite the hard economic conditions. The table below shows the top 7 storage vendors and their relation to their Q2 numbers.

EMC remained at the top and gained a massive 3.6% jump in market share. Looks like they are firing on all cylinders and chugging like an unstoppable steam train. IBM gained 0.1% in second place as its stable of DS8000, XIV and Storwize V7000 is taking shape. Even though IBM has been holding steady, I still think that their present storage lineup is staggered and lacks a seamless upgrade path for their customers.

NetApp, which I always term the “little engine that could”, is slowing down. They were badly hit in the last quarter, delivering lower than expected revenue numbers according to the analysts. Their stock took a tumble too. As quoted by Gartner, “NetApp’s third-quarter results reflect an overdependence on a few large customers, limited geographic coverage in high-growth countries and increased competition from Dell, EMC, HP and IBM in the midrange modular ECB disk array market segment.”

I wrote in my recent blog, that NetApp has to start evolving from a pure-play storage vendor into a total storage and data management solution vendor. The recent rumours of NetApp’s interests in Commvault and Quantum should make a lot of sense if NetApp decides to make that move. Come on, NetApp! What are you waiting for?

HP came back strong in this report. They are in 4th place with 10.4% market share and hot on NetApp’s heels. After many months of nonsensical madness – the Leo Apotheker firing, trying to ditch the PC business, the killing of the WebOS tablet, the very public Oracle-HP spat – things are beginning to settle a bit under their new CEO, Meg Whitman. At a recent HP Discover conference in Vienna, it was reported that the HP storage team is gung-ho about what they have in their arsenal right now. They called it “The 4 Jewels of HP Storage Crown”, which includes 3PAR, Ibrix, StoreOnce and LeftHand. They also leap-frogged over HDS and Dell in the recent Gartner Magic Quadrant (See below).

Kudos to HP and team.

HDS seems to be doing well, and so is Dell. But the Gartner numbers tell a different story. HDS lost market share and is now tied with Dell at 7.8% market share. Dell, despite its strong marketing on Compellent, could not make up its loss after breaking off with EMC.

Fujitsu and Oracle complete the line up.

My conclusion: HP and IBM are coming back; EMC is well and far ahead of everyone else; NetApp has to evolve; Dell is still lacking in enterprise storage savviness despite having good technology; no comments about HDS.

Magic on storage players

It’s that time of the year again when Gartner releases its Magic Quadrant for the block-access, external controller-based, mid-range and high-end modular disk arrays market. This particular report is very important because it represents the mainstay of the overall storage industry, viewed from a more qualitative angle. Whereas the other charts and reports work with statistics and numbers, this is the chart that everyone in the industry flocks to. The Gartner Magic Quadrant (MQ) is the storage industry’s indicator of who the leaders are, who the visionaries are, who the executive wizards are and who the laggards (also known as niche players) are.

So, this time around, who’s in the Leaders Quadrant?

The perennial players in the Leaders Quadrant are EMC, IBM, NetApp, HP, Dell, and HDS. In my previous blog, I shared with you the IDC figures about market shares, but the Gartner MQ shows a more subtle side, and one that perhaps carries more weight with organizations.

From the IDC numbers announced previously, we have seen Dell taking a beating. They have lost market share and similarly, in this latest Gartner MQ, they have lost some of their influence as well. Everyone expected their Compellent solution to be robust and that having EqualLogic, Ocarina and Exanet in its stable would strengthen their presence in the storage industry. Surprisingly, Dell lost on both the statistically charged IDC market numbers and this Gartner MQ. Perhaps they were too hasty to dump EMC a few months ago?

Gartner also reported that HP has made a significant leap in the Leaders Quadrant. It has leapfrogged over HDS and IBM when comparing their positions in Gartner’s MQ chart. This could be coming from their concerted effort to pitch their Converged Infrastructure, a vision that, in my opinion, simplifies computing. HP Malaysia shared their vision with me a few months ago, and I was impressed. What I was not very impressed with then, and even now, is that their storage solutions story is still staggered, lacking the gel. Perhaps it is work in progress for HP, with the 3PAR, the IBRIX and the EVA. But one thing’s for sure. They are slowly but surely getting the StoreOnce story right and that’s good news for customers. I did a review of HP StoreOnce technology a few months ago.

Perhaps it’s time for HP to ditch their VLS deduplication, which to me, confuses customers. By the way, HP VLS is an OEM from Sepaton. (Sepaton is “No tapes” spelled backwards)

Here’s a glimpse of last year’s Magic Quadrant.

In the Niche Quadrant, there are a few players making waves as well. 2 companies to watch out for are Huawei (they dropped Symantec 2 weeks ago) and Nexsan. Nexsan has been beefing up its marketing of late, and I often see them in mailing lists and ads on some websites I went to.

But the one to watch will be Huawei. This is a company with deep pockets, hiring the best in the storage industry and also has a very strong domestic market in China. In the next 2-3 years, Huawei could emerge as a strong contender to the big boys. So watch out!

Gartner Magic Quadrant is indeed weaving its magic and this time around the magic is good to HP.

Crisis? What crisis?

The storage train is still chugging hard and fast as IDC just released its Worldwide Disk Storage System Tracker for 3Q11. Despite the economic climate, the storage market posted a strong 8.5% revenue growth and a whopping 30.7% growth in terms of petabytes shipped. In total, 5,429PB were shipped in Q3.

So how did everyone do in this latest Tracker report?

In the Worldwide Total External Disk Storage Systems, EMC is still holding on to the #1 position, with 28.6%. IBM and NetApp came in at 12.7% and 12.1% respectively. The table below summarizes the percentage view of the top storage players, in terms of revenue.

From the table, everyone benefited from the strong buying of storage in the last quarter. EMC posted a strong market share gain of almost 3%, while everyone else either gained or lost less than 1% market share. But the more interesting numbers are not in the market share column but in the % growth column.

HDS posted the strongest growth of 22.1%, slightly higher than EMC’s 22.0%. HDS is beginning to get their story right, putting the right storage solutions in place, and has been strongly focused on their services offering as well. That’s simply great news for HDS because this is a company not known for its marketing and advertising. The Japanese “culture” within HDS has probably taught it to be prudent, but to see HDS growing faster than the big boys like IBM and HP is something their competitors should respect. I believe customers are beginning to see the true potential of HDS.

As for EMC, everyone labels them the 800-pound gorilla but they have been very nimble and strong in the storage market for many quarters. This is due to the strong management team headed by Joe Tucci and his heir-in-waiting, Pat Gelsinger. Several of their acquisitions are doing well, with the likes of Isilon, Greenplum, Data Domain, and of course VMware. Even though VMware does not contribute to the EMC revenue numbers, the very fact that EMC owns more than 80% of VMware has already given EMC a lot of credibility in the storage battlefield. They are certainly going great guns.

NetApp took a hit in the last quarter when they missed the street’s revenue numbers. Their stock took a beating and there were rumours in the market that NetApp might acquire Commvault and Quantum to compete with EMC. EMC has been able to leverage its list of acquired companies and solutions very well, from data protection solutions like Networker and Avamar, to deduplication solutions like Data Domain and Avamar, to Documentum for content management and so on, while NetApp has, for the longest time, preferred a more “loosely-coupled” approach with their partners for a more complete solution set.

Other interesting reports from IDC are the Open SAN/NAS market, the NAS market and the iSCSI market.

The Open SAN/NAS market combination, according to IDC goes like this:

| Vendor | Market share |
| --- | --- |
| EMC | 31.3% |
| NetApp | 14.4% |

In the NAS-only market, EMC and Isilon (under the one EMC umbrella) compete with NetApp and the table is like this:

| Vendor | Market share |
| --- | --- |
| EMC | 46.7% |
| NetApp | 30.7% |

The iSCSI only market is led by Dell (EqualLogic and Compellent combined), followed by EMC and IBM. Here’s the summarized table:

| Vendor | Market share |
| --- | --- |
| Dell | 30.3% |
| EMC | 19.2% |
| IBM | 14.0% |

The strong growth is indeed good news as the storage market continues to weather the economic crisis storm. I have been saying this all along. The storage market in IT is still the growth engine as data keeps growing and growing, even though it was never the darling of the IT industry. Let’s hope the trend continues.

NetApp to buy Commvault?

The rumour mill is going again that Commvault is an acquisition target, and this time the suitor is NetApp. The rumour is not new, but somehow Commvault has gotten too big in the past couple of years to be swallowed up. But this time, it could happen as NetApp is hungry, …. very hungry.

NetApp took a big hit a couple of weeks back, when it announced its Q3 numbers. Revenues fell short of analysts’ expectations and the share price took a big hit. While its big rival, EMC, has been gaining much momentum on all fronts, it appears that NetApp is getting overwhelmed by the one-stop-shop of EMC. EMC is everything to everyone who wants storage, data protection software, services, data management, scale-out, data security, big data, cloud storage and virtualization and much more. NetApp has been very focused on what they do best, and that is storage. Everything revolves around their crown jewel, Data ONTAP, and they recently added Engenio to their stable of storage solutions.

NetApp does not mix the FAS storage with the Engenio and makes sure that their story-telling gels, but in the past few years, many other vendors have been taking the “one-stack-fits-all” approach. Oracle has Exadata, where servers, storage, database and networking are all-in-one. Many others are doing the same, while NetApp prefers more “loosely-coupled” partnerships, such as their “Imagine Virtually Anything” concept partnership with VMware and Cisco, in the shape of FlexPod. FlexPod is a flexible infrastructure package comprising presized storage, networking and server components designed to ease the IT transformation journey–from virtualization all the way to cloud computing.

Commvault would be a great buy (and a very expensive one) for NetApp. Things fit perfectly if NetApp decides to abandon its overly protective shield and start becoming a “one-stop-shop” to its customers, starting with data protection. Commvault is already the market leader in the Enterprise Disk-based Backup and Recovery market, as reflected in Gartner’s Magic Quadrant January 2011 report.

It’s amazing to see how Commvault got to become the leader in this space in just a few short years, and part of its unique approach is providing a common core engine called the Common Technology Engine (CTE). The singular core architecture allows different data management components – Backup, Replication, Archiving, Resource Management and Classification & Search – to share resources and, more importantly, detailed knowledge of true data management.

In the middle of this year, NetApp had an OEM deal with Commvault to resell their SnapProtect solution, which integrates with NetApp’s SnapMirror solution. The SnapProtect manages NetApp snapshots and SnapMirror replications and also enhances the solution as a tape-out for SnapMirror. Below shows how the Commvault SnapProtect fits into NetApp’s snapshots and SnapMirror data protection architecture.

Sources at NetApp’s C-level said that NetApp is still very much focused on their ONTAP strategy and on their “loosely-coupled” partnerships with key partners like VMware, Cisco, F5 and Quantum. But at the back of NetApp’s mind, I believe, it is time to do something about it. This “focused” (which could also be interpreted as overly cautious) approach is probably on its last legs as cloud computing is changing all that. The cost of integrating different, yet flexible, components of storage, data protection and data management is prohibitive to cloud service providers and NetApp must take a bolder approach to win the hearts of these providers. Having a one-stop-shop isn’t so bad anymore; it is beginning to make sense and NetApp had better do something quick. Commvault is one of the best out there and NetApp shouldn’t lose that chance.

Note: While the rumours of NetApp and Commvault are swirling, there have been rumours that Quantum could be another NetApp target.

Highroad to Parallel Road

Unless you are working with highly parallelized access to files in a large scale-out NAS environment, you probably don’t get to work much with Parallel NFS (pNFS). pNFS is part of the NFSv4.1 (RFC 5661) standard, and was introduced in January 2010 to address the NFS protocol in clustered, scale-out NAS environments. It provides parallel file access across distributed servers.

pNFS is heavily driven by Panasas, NetApp, EMC, IBM and Sun (now Oracle), among others. And funnily enough, the company that sticks out from the bunch is one that used to tout block storage as the way to go, not files. That’s EMC, the company that is better known for its SAN solutions than its NAS (remember Celerra and IP4700?). And EMC has embraced pNFS in a big, big way. Read EMC’s CTO for Global Marketing, Chuck Hollis’ blog here and here.

However, unknown to many, a lot of the thinking that goes into pNFS is very similar to that of an EMC product from some years ago. That product is EMC Highroad, which in later years was renamed the Multi-path File System (MPFS).

Note: If you want to know more about the history of HighRoad/MPFS, read this blog.

The cornerstone of EMC MPFS is their File Mapping Protocol or FMP, a robust protocol that defines the mapping of files to their addressable blocks in storage. In a nutshell, when I was responsible for this product during my time at EMC, I used to pitch to companies that with MPFS the file request goes through NFS but the response to the requester can come in blocks (iSCSI or Fibre Channel). The beauty of this was that NFSv3 was chatty and heavy, whereas delivering data in blocks via iSCSI or Fibre Channel has less overhead.

Hence the delivery is faster and EMC touted that the performance was 2-4x faster than NFS. Indeed, I have seen some lab test results from EMC’s work with the Schlumberger High Performance Lab in Houston, and the numbers were impressive. I still have them on Powerpoint somewhere.

Circa 2003-2004, EMC donated the FMP code to the IETF and, as they say, the rest is history.

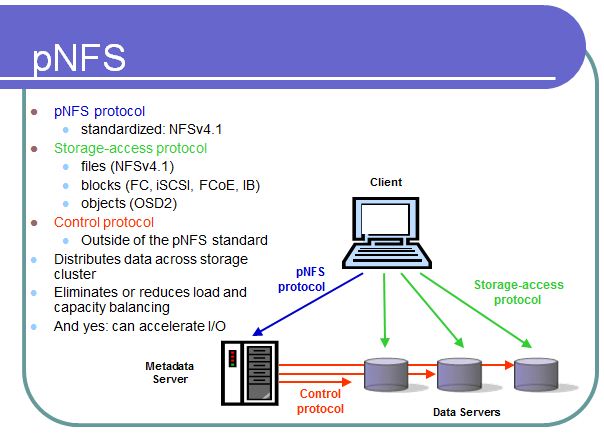

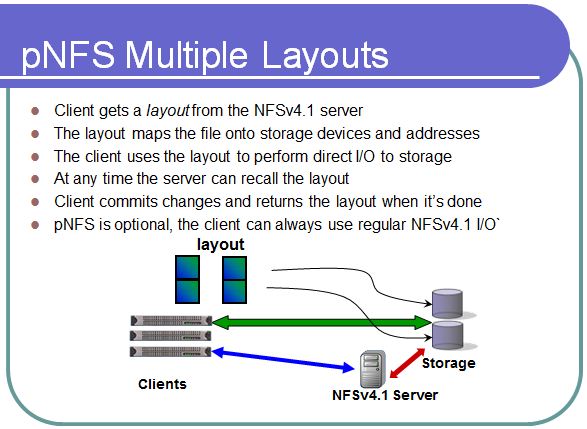

The picture below basically summarizes what pNFS is all about.

An NFSv4.1 client will basically check with a Metadata server via the pNFS protocol about the layout of the distributed cluster of servers. The file could be striped across multiple nodes of the cluster, and it is the metadata server that provides a map to the client to access the blocks or files from these nodes. This is exactly what the EMC HighRoad/MPFS File Mapping Protocol (FMP) did, mapping the file requests to their corresponding blocks. See diagram below:

And one of the powerful features of pNFS is that it is not just about NFS. The green arrow you see in the above diagram is the storage-access protocol. That access protocol can be NFSv4.1, CIFS, iSCSI, Fibre Channel, FCoE, Infiniband, and Object Storage Device (OSD).
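The role of the metadata server's map can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the NFSv4.1 layout format: the `Layout` class, the round-robin striping scheme, and the `locate` and `plan_read` helpers are all hypothetical names invented for this example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Layout:
    """Hypothetical, simplified stand-in for the map a metadata server returns."""
    stripe_unit: int         # bytes per stripe unit
    data_servers: List[str]  # data servers in round-robin stripe order


def locate(layout: Layout, offset: int) -> Tuple[str, int]:
    """Map a file byte offset to (data server, byte offset on that server)."""
    count = len(layout.data_servers)
    stripe_index = offset // layout.stripe_unit
    server = layout.data_servers[stripe_index % count]
    # Assume each server stores its stripe units back to back.
    local_stripe = stripe_index // count
    local_offset = local_stripe * layout.stripe_unit + offset % layout.stripe_unit
    return server, local_offset


def plan_read(layout: Layout, offset: int, length: int) -> List[Tuple[str, int, int]]:
    """Split one read into (server, local offset, size) requests.

    A client could issue these requests to the data servers in parallel.
    """
    requests = []
    end = offset + length
    while offset < end:
        server, local_offset = locate(layout, offset)
        room = layout.stripe_unit - offset % layout.stripe_unit
        size = min(room, end - offset)
        requests.append((server, local_offset, size))
        offset += size
    return requests


if __name__ == "__main__":
    layout = Layout(stripe_unit=1024, data_servers=["ds1", "ds2", "ds3"])
    for request in plan_read(layout, offset=512, length=3072):
        print(request)
```

The point of the sketch is the division of labour: the client asks one server where the data lives, then talks to the data servers directly for the data itself.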

In order to have pNFS working, the NFS client must be NFSv4.1 ready and that code has been made available in Linux and OpenSolaris. Other Unix vendors, no doubt, will be coming out with their NFSv4.1 implementation soon. Oooooh, there will be a Windows NFSv4.1 client coming as well!

But I want to dispel the notion that EMC is a SAN company. EMC is a very strong NAS company and if you have seen the IDC market share (ok, ok, many of you out there will argue about it), EMC is #1 in NAS. And their contribution to pNFS is immense.

Stop stroking your …

A few days after I wrote about the performance benchmark bag of tricks, EMC fired the first salvo at NetApp’s SPECSfs2008 world record on NFS IOPS.

EMC is obviously using all its ammo to deflate NetApp’s chest-thumping act, with Storagezilla’s blog. Mark Twomey, who is the alter ego of Storagezilla, posted several observations about NetApp’s apparent use of disk short stroking to artificially boost its performance numbers. This puts NetApp against the wall, with Alex MacDonald (who incidentally is SNIA NFSv4 co-chairman) of the office of the CTO responding hard to Storagezilla’s observation.

The news of this appeared in The Register. Read all about it.

With no letting up, the article also mentioned EMC Isilon’s CTO, Rob Peglar, adding more fuel to the fire.

I spoke about short stroking as one of the tricks used to gain better numbers in benchmarks. I also mentioned that these numbers are of little use in the real world, and I would like to add that they are just there for marketing reasons. So, for you readers out there, benchmarks are really not that big a deal.

Have a great weekend!

Performance benchmarks – the games that we play

First of all, congratulations to NetApp for beating EMC Isilon in the latest SPECSfs2008 benchmark for NFS IOPS. The news is everywhere and here’s one here.

EMC Isilon was blowing its horn several months ago when it hit 1,112,705 IOPS, recorded from a 140-node S200 cluster with 3,360 disk drives and an overall response time of 2.54 msecs. Last week, NetApp became top dog, pounding its chest with 1,512,784 IOPS on 24 FAS6240 nodes with an overall response time of 1.53 msecs. There were 1,728 450GB, 15,000rpm disk drives and the FAS6240s were fitted with Flash Cache.

And with each benchmark that you and I have seen before and after, we will see every storage vendor trying to best the others, and when they do, their horns will be blaring, the fireworks will be out and they will be pounding their chests like Tarzan, saying “Who’s your daddy?” The euphoria usually doesn’t last long as performance records are broken all the time.

However, the performance benchmark results are not to be taken at face value because they are not true representations of real-life production environments. 2 years ago, the now-defunct magazine Byte and Switch (now part of Network Computing) reviewed a 9-year study on File Systems and Storage Benchmarking. In a very interesting manner, it revealed that a lot of the time, benchmark results are merely reduced to single graphs which carry little information about how the benchmark was conducted, how long the benchmark took and so on.

The paper, published by Avishay Traeger and Erez Zadok from Stony Brook University and Nikolai Joukov and Charles P. Wright from the IBM T.J. Watson Research Center, entitled “A Nine Year Study of File System and Storage Benchmarking”, surveyed 415 file system and storage benchmarks from 106 published papers, and the article quoted:

Based on this examination the paper makes some very interesting observations and conclusions that are, in many ways, very critical of the way “research” papers have been written about storage and file systems.

Therefore, the paper highlighted the way the benchmarks were done and the way the results were reported, and judging by the strong title of the online article that reviewed the study (it was titled “Lies, Damn Lies and File Systems Benchmarks”), benchmarks are not the pictures that say a thousand words.

Be it TPC-C, SPC1 or SPECSfs benchmarks, I have gone through some interesting experiences myself, and there are certain tricks of the trade, just like in a magic show. Some of the very common ones I come across are:

- Short stroking – a method to format a drive so that only the outer sectors of the disk platter are used to store data. This practice is done in I/O-intensive environments to increase performance.

- Shortened test – performance tests that run for several minutes to achieve the numbers rather than for prolonged periods (which would mimic real life)

- Reporting aggregated numbers – Note the number of nodes or controllers used to achieve the numbers. It is not ONE controller that can achieve the numbers, but an aggregated performance result summed across the number of controllers
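To see why the node count matters, the headline figures quoted earlier can be normalised per node and per disk. This is only back-of-the-envelope arithmetic on the numbers in this post; the dictionary layout and the `per_unit` helper are illustrative assumptions, and the comparison ignores differences such as Flash Cache and drive types.

```python
# Headline SPECSfs2008 figures as quoted in this post.
results = {
    "EMC Isilon S200": {"iops": 1_112_705, "nodes": 140, "disks": 3_360},
    "NetApp FAS6240": {"iops": 1_512_784, "nodes": 24, "disks": 1_728},
}


def per_unit(iops: int, units: int) -> float:
    """Average IOPS contributed by each unit (node or disk)."""
    return iops / units


if __name__ == "__main__":
    for name, r in results.items():
        per_node = per_unit(r["iops"], r["nodes"])
        per_disk = per_unit(r["iops"], r["disks"])
        print(f"{name}: {per_node:,.0f} IOPS per node, {per_disk:,.0f} IOPS per disk")
```

On these figures the Isilon cluster works out to roughly 7,948 IOPS per node and the NetApp configuration to roughly 63,033 IOPS per node, which shows how much a single headline number hides about the configuration behind it.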